POL 304: Using Data to Understand Politics and Society

Big Data

Olga Chyzh [www.olgachyzh.com]

Assigned Readings

Danah Boyd and Kate Crawford. 2011.Six Provocations for Big Data. A Decade in Internet Time: Symposium on the Dynamics of the Internet and Society.*

David Lazer et al.2014. The Parable of Google Flu: Traps in Big Data Analysis.

Aylin Caliskan, Joanna J. Bryson, and Arvind Narayanan. 2017. Semantics Derived Automatically from Language Corpora Contain Human-Like Biases. Science 356(6334):183--186,

Jevin D. West. 2010. How to Improve the Use of Metrics: Learn from Game Theory. Nature 465(17):870--872.

Type of Scientific Inference

Deduction: “top-down” reasoning whereby, if the premises are held to be true (and the terms in use precisely specified), then the conclusion follows as a logical necessity, based on the reductive application of laws to a closed domain of discussion.

- Example. The Earth is round and revolves around the Sum. Therefore, the Sun rises in the East.

Induction: “bottom-up” reasoning, whereby a conclusion is reached via generalization or extrapolation from initial information.

- Examples: The Sun rose in the East yesterday and today. Therefore, the Sum must always rise in the East.

Is Big data more amenable to deductive or inductive inference?

Induction to the Extreme

"This is a world where massive amounts of data and applied mathematics replace every other tool that might be brought to bear. Out with every theory of human behavior. [...] Who knows why people do what they do? The point is they do it, and we can track and measure it with unprecendented fidelity. With enough data, the numbers speak for themselves."

-- Anderson (2008)Induction to the Extreme

"This is a world where massive amounts of data and applied mathematics replace every other tool that might be brought to bear. Out with every theory of human behavior. [...] Who knows why people do what they do? The point is they do it, and we can track and measure it with unprecendented fidelity. With enough data, the numbers speak for themselves."

-- Anderson (2008)Is this praise or a critique?

Induction to the Extreme

"This is a world where massive amounts of data and applied mathematics replace every other tool that might be brought to bear. Out with every theory of human behavior. [...] Who knows why people do what they do? The point is they do it, and we can track and measure it with unprecendented fidelity. With enough data, the numbers speak for themselves."

-- Anderson (2008)Is this praise or a critique?

Do numbers speak for themselves?

Discussion Questions

What is Big Data? What is Small Data?

What are the unique challenges of analyzing Big Data?

In what way are the "four forces"---the market, the law, social norms, and code---at odds when it comes to Big Data?

Market: The market sees Big Data as pure opportunity: marketers use it to target advertising, insurance providers want to optimize their offerings, and Wall Street bankers use it to read better readings on market temperament (Boyd and Crawford, 2).

Law: Legislation has already been proposed to curb the collection and retention of data, usually over concerns about privacy (Boyd and Crawford, 2).

Norms: Features like personalization allow rapid access to more relevant information, but they present difficult ethical questions and fragment the public in problematic ways (Boyd and Crawford, 2).

Code: Social and cultural researchers have a stake in gaining access.

A New Definition of Knowledge?

What are the parallels between Fordism and Big Data? Is this a good thing?

Do Big Data dictate what questions we ask? Why? Does it have to? Consider your choice of question for Assignment 2 as an example.

- Ford's innovation was using the assembly line to break down interconnected, holistic tasks into simple, atomized, mechanistic ones. He did this by designing specialized tools that strongly predetermined and limited the action of the worker. Similarly, the specialized tools of Big Data also have their own inbuilt limitations and restrictions (Boyd and Crawford, 4).

Objectivity and Accuracy

Are Big Data biased? In what way? Why? Do biased data lead to biased answers? Do unbiased data lead to unbiased answers? How can you get unbiased answers from biased data?

Why are Big Data and "whole data" not the same? What are the implications?

Under what conditions does Bigger Data mean better data? When does it not?

What measurement issues should a Big Data scientist watch out for (vs. a small data scientist)?

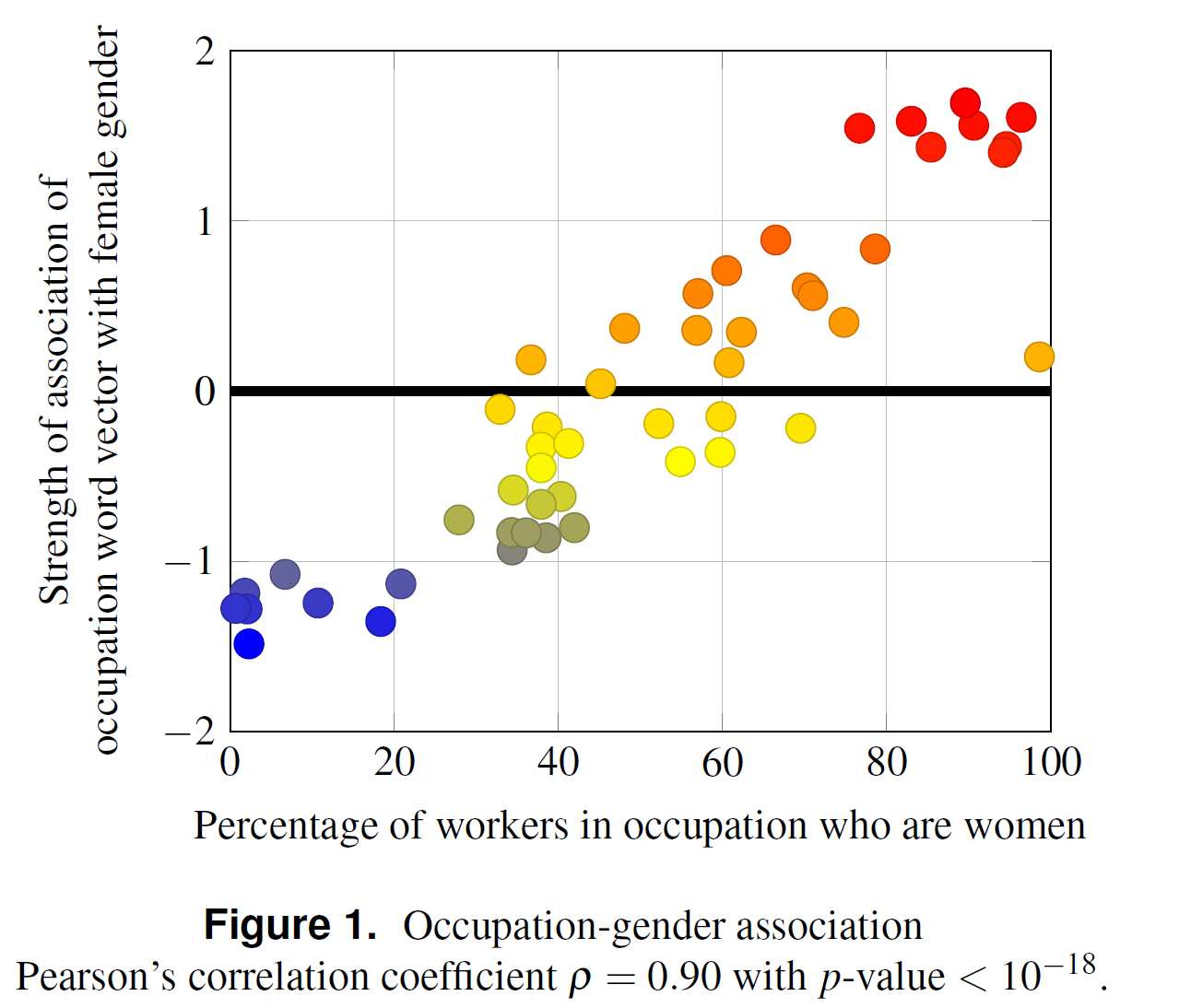

What is the IAT test? What is the Word Embedding Association Test (WEAT)?

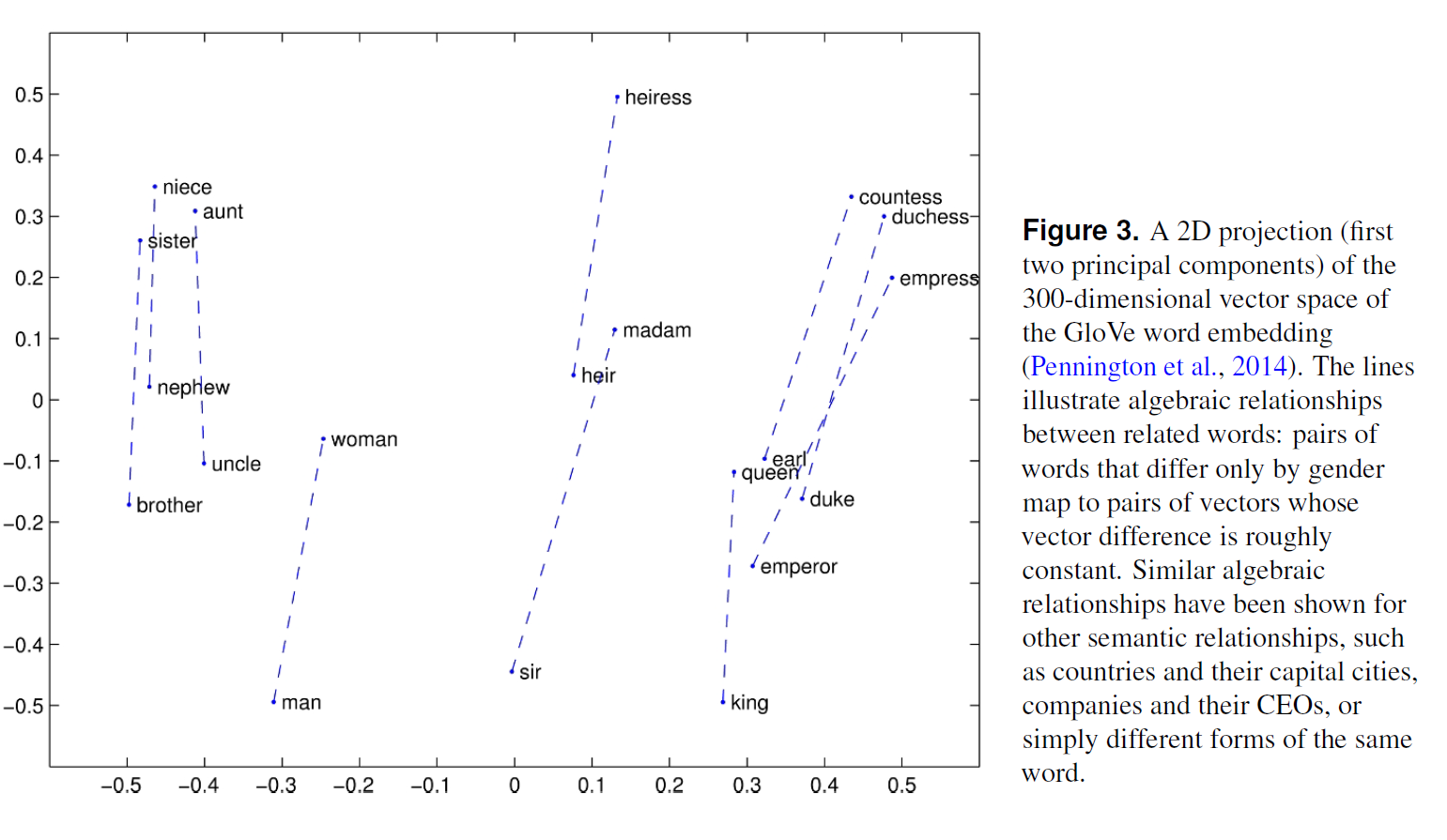

Roughly, for each word, its relationship to all other words around it is summed across its many occurrences in a text. We can then measure the distances (more precisely, cosine similarity scores) between these vectors. The thesis behind word embeddings is that words that are closer together in the vector space are semantically closer in some sense. Thus, for example, if we find that programmer is closer to man than to woman, it suggests (but is far from conclusive of) a gender stereotype.

Caliskan et al (2017)

What corpora do Caliskan et al (2017) use? What evidence of Big Data bias do they find?

How can we reduce these biases?

Why is This a Problem?

Ethical Research

What is the balance between data transparency and privacy?

How can we anonymize the data while preserving its usability?

Should public data be fair game? Under what conditions?

What is research accountability?

What is the "new digital divide"?

Google Flu Tracker

How can Google searches predict flu cases?

What are the similarities between GFT and the model used in the "Bestseller Code"?

Why did GFT miss the 2009 H1N1 pandemic?

What is "big data hubris"?

How did GFT fare on research transparency and reproducibility?

How can we use the lessons from GFT failure to improve predicting trends moving forward?

“Big data hubris” is the often implicit assumption that big data are a substitute for, rather than a supplement to, traditional data collection and analysis.

Lessons from Game Theory

What is the problem described in the article? What recommendations did it suggest for addressing it? Does the problem/solutions apply beyond the academe, e. g., venture capitalism, industry, government?

How can we apply the strategies proposed in the article to help improve the quality of Big Data research?

Using Correlations to Specify a Model

Download the Global Terrorism Dataset and its codebook from the course website.

Load the data (I recommend using function

freadfrom thedata.tablepackage to speed up loading).

#install.packages("data.table")library(data.table)mydata<-fread("./data/GTD_data_small.csv", header=TRUE)cor(mydata$nkill,mydata$iyear, use="complete.obs")## [1] 0.01536565Your Turn

Use the

corfunction to find the top 10 variables correlated withnkill. See?corfor help.Regress

nkillon the variables you identified (use thelmfunction).What is the R2 of your model? What does this model tell you about the number of casualties in terrorist attacks?

Discussion

- Regress nkill of the variables with the highest correlation to it:

## ## Call:## lm(formula = nkill ~ nwound + nkillus + nkillter + nhostkid + ## nreleased + nwoundte + suicide, data = mydata)## ## Residuals:## Min 1Q Median 3Q Max ## -80.85 -1.74 -1.62 -0.63 336.49 ## ## Coefficients:## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 1.592257 0.145419 10.949 < 2e-16 ***## nwound 0.124067 0.006334 19.587 < 2e-16 ***## nkillus 0.014002 0.051053 0.274 0.784 ## nkillter 2.167832 0.142814 15.179 < 2e-16 ***## nhostkid 0.038977 0.002842 13.715 < 2e-16 ***## nreleased -0.013208 0.003117 -4.238 2.29e-05 ***## nwoundte -0.845000 0.132927 -6.357 2.19e-10 ***## suicide 12.146725 1.413918 8.591 < 2e-16 ***## ---## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1## ## Residual standard error: 11.35 on 6934 degrees of freedom## (184522 observations deleted due to missingness)## Multiple R-squared: 0.8191, Adjusted R-squared: 0.8189 ## F-statistic: 4484 on 7 and 6934 DF, p-value: < 2.2e-16